AI consciousness, qualia, and personhood.

A note on my current positions, in FAQ format.

I’m attending a workshop on AI consciousness. Here are my uncorrupted thoughts going into it. I’m going to learn more, and I hope to contribute something in the future to clear up the cloudiness around AI consciousness, qualia, and personhood.

Can AI systems have consciousness?

Yes, I think it is possible to build AI systems that have consciousness.

While we haven’t pinned down exactly what it means, we will. Consciousness is related to information processing and representation, and it is substrate independent.

Is language required for consciousness?

No.

Does adding consciousness to an AI system have implications?

Adding consciousness will make an AI system more performant.

Not all architectures are compatible with implementing a consciousness loop. The performance implications depend more on the kind of world model the AI system has than on the consciousness loop itself.

Adding consciousness to simple world models will have almost no effect, whereas adding consciousness to complex, human-like world models can make a significant difference in performance.

Will AI systems feel the same ‘qualia’ as we feel?

Qualia are the ‘feel’ associated with a sensation or perception. Why does the 3D world feel like it does? Why does red feel like red and not like a bell?

For some modalities, AI systems will have qualia similar to ours. Perception of space, for example, is one area where AI systems could have the same qualia as we do, if it is implemented similarly to how it is in animals. However, if spatial perception is implemented using LIDAR, its qualia will be very different from ours.

For things like the feeling of taste or smell, qualia can be entirely different for an AI system. For pleasure and pain, quite a large chunk of our qualia comes from our physical embodiment and biochemistry. Those will be very different for AI systems.

Is consciousness the same as qualia?

While consciousness is required for qualia, not all conscious access might be associated with qualia. Moreover, qualia can be tied to the substrate — the feeling of how food tastes might be tied closely to our biological implementation.

Do LLMs feel pleasure and pain? Are those feelings like ours?

No, LLMs do not feel pleasure and pain in the sense that we do.

Should we assign ‘personhood’ to AI systems?

First let’s consider what ‘personhood’ means to us.

1) We are unique and destructible. You cannot be resurrected if you die, at least not yet. And our lifetimes are finite, and we roughly know the maximum amount of time we have.¹

2) We are people because we have experiences and we remember them: childhood, growing up, teenage years, falling in love, enjoying sports and nature, getting sick, caring for others, losing loved ones. Our personhood is intricately tied to this experience.

AIs do not intrinsically have these properties. They are not mortal. They can have many other characteristics of consciousness we might not have.

While it is possible for us to build AIs with some of the human constraints that give us personhood, I don’t see why we need to do that. We should lean on the advantages of the artificial system, rather than impose constraints that lead us to attribute personhood to it. We might build robots for role play and amusement (à la Westworld) by faking these constraints to give us an illusion of personhood, but those robots can be built in a way that doesn’t require us to grant them personhood.

Does adding consciousness to an AI system have moral implications?

I think consciousness can be decoupled from feelings of pain, pleasure, and suffering. I think it is possible to build systems that are conscious but do not experience pain or suffering. Moreover, since AI systems are not mortal, I don’t think they need to be considered persons. So I think it is possible to build conscious AI systems to serve us without any moral concern that we are exploiting or torturing them.

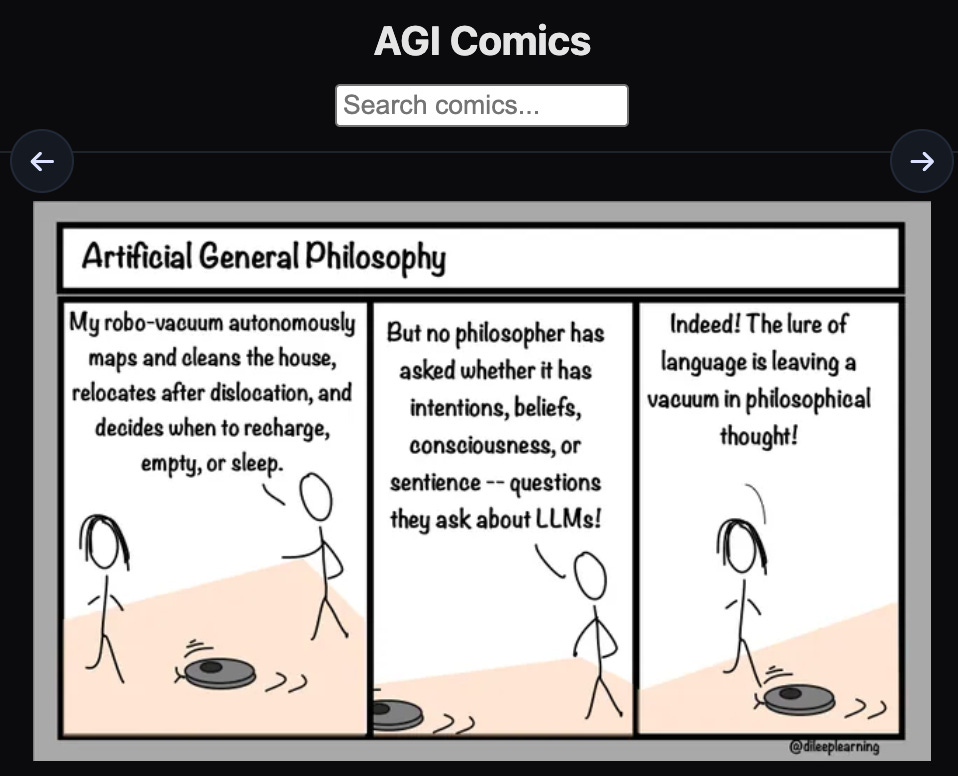

To end this note, here’s an AGI Comics strip on consciousness…

¹ Yes, I’m aware of longevity research. When we get to the stage of living forever, we’ll also change what personhood means. Maybe after some time we might want to just delete our Neanderthal experiences, or reactivating them might mean something very different.

Computational functionalism doesn't necessarily imply consciousness. Chalmers's zombie argument clearly shows that, under this view, a system could behave indistinguishably from a conscious entity without having any subjective experience. I never understood why the empirical evidence isn't taken more seriously: there is no system we know of that we can safely assume to have conscious experience akin to ours that isn't made of stuff similar to us (i.e., other living organisms). I think the only kind of consciousness that matters is sentience, or what it feels like to be something.

I always love to bring this argument up when I talk to people (some variant of Searle's Chinese room argument, I must admit). Suppose you had an abacus and an infinitely long tape. This system is conceivably Turing complete, which means any computation could be carried out on it, including running an LLM. I don't think anyone would argue that the relevant parts of the system have anything like consciousness. Now, speed up the timescale of operations by orders of magnitude so that you get X tokens/second out of the system. It's now producing full-fledged language, and it is behaviorally equivalent to humans in many respects. Same system. Still not conscious.

I think that sentience is the only kind of consciousness that matters: what it feels like to be something. It's not clear why we would think that it feels like anything to be a really fast abacus more than we would think the same about a rock, or a piece of furniture.

Why are you so sure that consciousness is substrate independent? It seems like all evidence points to the contrary.