A critique of successor representations as a model of learning in the hippocampus

The successor representation (SR) is a popular, influential, and often-cited model of place cells in the hippocampus. However, it is also one of the most frequently misunderstood ideas: it is often credited with capabilities it doesn’t have, and cited in contexts where it should not be cited. Multiple review papers state that “place cell firing patterns can be simulated by a column of SR”, which gives the impression that SR is a model of place cell learning.

In every talk I have given, there have been questions about SR that misunderstand what the model actually does, often using phrases like “predicting future states”, “not representing space, only relationships”, “encodes a state in terms of future states”, “predictive map”, etc. These statements can be made about our CSCG model too, but the two models are entirely different, with very different capabilities. This blog is an attempt to document my take, which is often expressed too succinctly in the discussion sections of research papers.

I will make the following points:

The successor representation (SR) is NOT a model of place cell learning in the hippocampus. SR cannot explain how place fields are formed, or how the hippocampus forms latent variables or ‘cognitive maps’.

Additional properties ascribed to SR, like “predictive states” and the ability to form hierarchies, are properties of simple Markov chains, not of SR itself. As a predictor of next states, SR is worse than the underlying Markov chain.

Some experiments in humans and animals report evidence for SR. On closer inspection, these experiments might NOT be providing strong evidence for SR, and are more likely evidence for model-based planning using a model like CSCG.

Caveat: While I quote papers to make the point, I don’t intend to say that these papers or experiments themselves are misleading. My intention is to counteract some misunderstandings that have formed over the years due to imprecise citation patterns. Our understanding has also improved over time, and that affords us more precision.

Why SR is not a model of learning cognitive maps or place cells in the hippocampus.

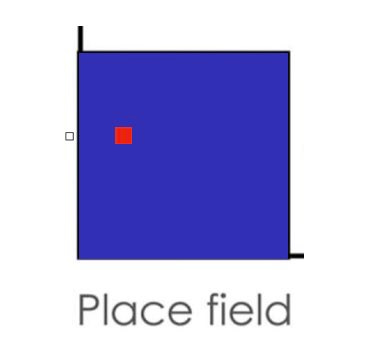

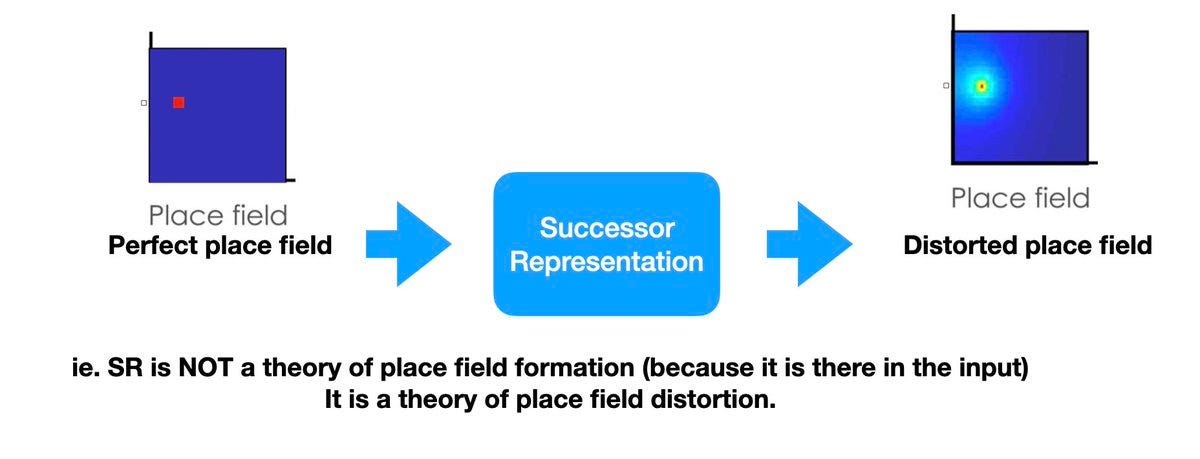

Let us start with the following figure from the excellent paper ‘The successor representation, its computational logic’, by Sam Gershman. On the right is the SR matrix M(states(t), states(t+1)). The figure shows that if you reshape a column of this matrix, you get a place field.
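For readers who want to reproduce the idea, here is a minimal sketch of the standard closed form of the SR for a fixed policy, M = sum_k γ^k T^k = (I - γT)^(-1), where T is the one-step transition matrix. The 7 x 7 grid, the random-walk policy, and γ = 0.9 are assumptions for illustration, not the settings behind the figure, and the function names are hypothetical.

```python
import numpy as np

def grid_random_walk(n):
    """Random-walk transition matrix T on an n x n grid (4-neighbour moves; walls block)."""
    T = np.zeros((n * n, n * n))
    for r in range(n):
        for c in range(n):
            nbrs = [(r + dr) * n + (c + dc)
                    for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1))
                    if 0 <= r + dr < n and 0 <= c + dc < n]
            T[r * n + c, nbrs] = 1.0 / len(nbrs)
    return T

def successor_representation(T, gamma=0.9):
    """M = sum_k gamma^k T^k = (I - gamma T)^(-1): expected discounted future occupancy."""
    return np.linalg.inv(np.eye(T.shape[0]) - gamma * T)

n = 7
M = successor_representation(grid_random_walk(n))
centre = (n // 2) * n + n // 2
field = M[:, centre].reshape(n, n)   # one column of M laid back onto the grid
print(np.round(field, 2))            # a bump peaked at the centre cell: a "place field"
```

Each row of M sums to 1 / (1 - γ), and the reshaped column peaks at the cell it belongs to.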

If learning is what populates the SR matrix, and the columns of the SR matrix correspond to place cells, doesn’t SR explain how place cells are learned? To the surprise of many, the answer is no!

To see this, we need to look at what the input to SR learning is. The inputs to SR at times t, t+1, … are the vectors states(t), states(t+1), and so on. You can think of each input as a column vector. Let’s take the input to SR at time t, states(t), and reshape it, just like we reshaped a column of the SR matrix. If you do that, you get the following:

And what is that? It is a perfect place field! So, the input to the SR, at every time step, is a perfect place field that precisely indicates where the agent/animal currently is!

SR cannot be an explanation for place field learning because the inputs to SR are already perfect place fields. (If anything, it could be a theory of how place fields distort, given perfect place fields.) How did the perfect place fields form in the first place? SR offers no explanation for that.
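A minimal TD-learning sketch makes the point concrete. It assumes a 5 x 5 arena, a random-walk policy, γ = 0.9 and learning rate α = 0.1, all hypothetical choices. Note what the learner is given at every step: `one_hot(s)`, a vector that is 1 at the current location and 0 everywhere else, so the location is simply handed to it.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 5                    # hypothetical 5 x 5 arena
N = n * n
gamma, alpha = 0.9, 0.1

def one_hot(s):
    """The input SR receives at each step: 1 at the current location, 0 elsewhere."""
    x = np.zeros(N)
    x[s] = 1.0
    return x

def step(s):
    """Random-walk move to a 4-neighbour inside the arena."""
    r, c = divmod(s, n)
    moves = [(r + dr, c + dc) for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1))
             if 0 <= r + dr < n and 0 <= c + dc < n]
    rr, cc = moves[rng.integers(len(moves))]
    return rr * n + cc

M = np.zeros((N, N))
s = 0
for t in range(20000):
    s_next = step(s)
    # TD update of the SR row of the current state: target = one_hot(s) + gamma * M[s_next]
    M[s] += alpha * (one_hot(s) + gamma * M[s_next] - M[s])
    s = s_next

# Reshaping the input at any time step gives a single active cell at the agent's location.
print(one_hot(12).reshape(n, n))
```

Reshaping that input gives a single active cell at the agent's position, which is exactly the ‘perfect place field’ described above.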

Note that this is very different from CSCG: the inputs to CSCG are not locations, but sensory inputs that do not directly correspond to locations. In CSCG, location and head direction are induced as latent variables, whereas SR requires locations to be directly sensed.

The above sentence in the introduction of the popular Stachenfeld et al 2016 paper could be a source of misinterpretation. It says that “according to SR place cells do not encode place per se but rather a predictive representation of future states given current state”. What is left unsaid until later is that what they call a state is precisely a location, i.e. a place cell, and a directly sensed one at that.

Many properties attributed to SR are just the properties of the adjacency matrix/Markov chain.

If what you sense at every time instant is the unique location, then you can directly set up a Markov chain over those locations and obtain the same properties ascribed to SR. Learning an adjacency matrix (which location is adjacent to which location) is not a hard problem. It is just a Markov chain: you populate it with the transitions you observe. The edges of that Markov graph can be annotated with the actions, and this graph has all the information needed for navigation.
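As a sketch of how little is needed, assuming locations are sensed directly (as SR assumes) and actions are observed, an action-annotated Markov chain can be learned by counting transitions. The trajectory format and the function name are hypothetical.

```python
from collections import defaultdict

def learn_markov_graph(trajectory):
    """Count observed (location, action, next_location) transitions.

    `trajectory` alternates location, action, location, ... with locations
    sensed directly, as SR assumes.
    """
    counts = defaultdict(lambda: defaultdict(int))   # counts[s][s_next]
    actions = {}                                      # actions[(s, s_next)] = action
    for i in range(0, len(trajectory) - 2, 2):
        s, a, s_next = trajectory[i], trajectory[i + 1], trajectory[i + 2]
        counts[s][s_next] += 1
        actions[(s, s_next)] = a
    # Normalise counts into transition probabilities P(s_next | s)
    T = {s: {t: c / sum(nxt.values()) for t, c in nxt.items()} for s, nxt in counts.items()}
    return T, actions

# Hypothetical corridor walk A <-> B <-> C
traj = ["A", "right", "B", "right", "C", "left", "B", "left", "A", "right", "B"]
T, actions = learn_markov_graph(traj)
print(T["B"])               # {'C': 0.5, 'A': 0.5}
print(actions[("B", "A")])  # left
```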

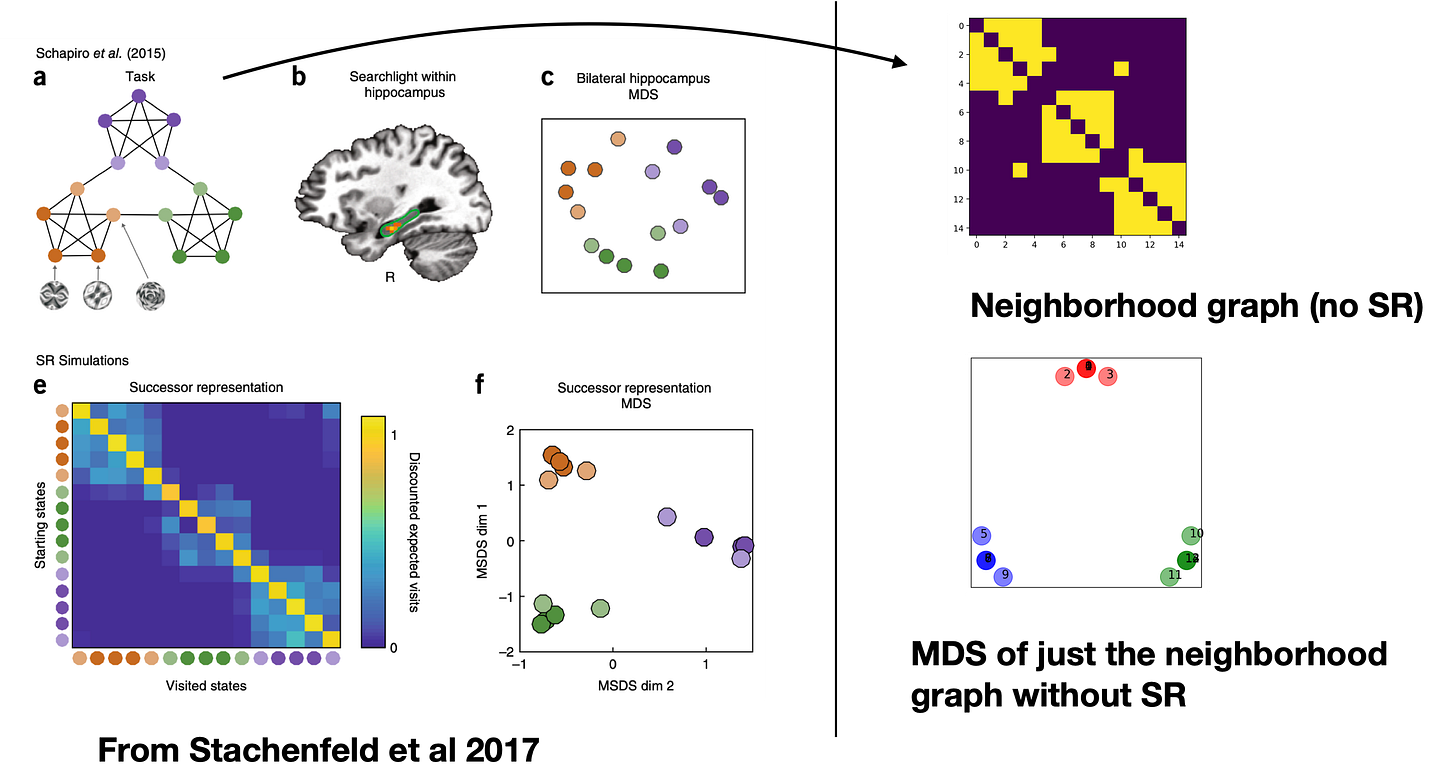

Note that the generic statements made about SR (‘predictive representation’, ‘encodes temporal relationships’, ‘columns represent place cells’) are true of this simple Markov chain too. In fact, many of the properties attributed to SR, such as the formation of hierarchies using community structure, are just properties of the underlying Markov chain without any SR. Navigation computations on the Markov graph are not expensive at all; you can use Dijkstra’s algorithm to compute the shortest path, for example.
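For the navigation step, a standard Dijkstra search over such an action-annotated graph might look like this. The graph layout `graph[s] = [(action, next_state, cost), ...]` and the function name are assumptions for this sketch, which uses Python's `heapq` as the priority queue.

```python
import heapq

def dijkstra_plan(graph, start, goal):
    """Shortest path on an action-annotated graph.

    graph[s] is a list of (action, next_state, cost) edges.
    Returns the list of actions from start to goal, or None if unreachable.
    """
    dist = {start: 0}
    prev = {}                       # prev[s] = (previous_state, action)
    frontier = [(0, start)]
    while frontier:
        d, s = heapq.heappop(frontier)
        if s == goal:
            break
        if d > dist[s]:
            continue                # stale queue entry
        for action, t, cost in graph.get(s, []):
            nd = d + cost
            if nd < dist.get(t, float("inf")):
                dist[t] = nd
                prev[t] = (s, action)
                heapq.heappush(frontier, (nd, t))
    if goal not in dist:
        return None
    plan, s = [], goal
    while s != start:               # walk back from the goal, collecting actions
        s, action = prev[s]
        plan.append(action)
    return plan[::-1]

# Hypothetical 2 x 2 room: A B / C D
graph = {
    "A": [("right", "B", 1), ("down", "C", 1)],
    "B": [("left", "A", 1), ("down", "D", 1)],
    "C": [("up", "A", 1), ("right", "D", 1)],
    "D": [("left", "C", 1), ("up", "B", 1)],
}
print(dijkstra_plan(graph, "A", "D"))   # ['right', 'down']
```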

As another example, the fact that nodes in a neighborhood community of a graph cluster together when visualized with multidimensional scaling (MDS) is not a property of SR; it is a property of the underlying Markov chain, as shown in the figure above.
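One way to check the clustering claim without any SR, assuming classical (Torgerson) MDS applied to shortest-path (hop) distances on the graph, is the sketch below. The two-community graph is a hypothetical toy, not the graph in the figure.

```python
import numpy as np
from collections import deque

def shortest_path_lengths(adj):
    """All-pairs hop distances by breadth-first search (unweighted, connected graph)."""
    n = len(adj)
    D = np.zeros((n, n))
    for src in range(n):
        seen = {src: 0}
        q = deque([src])
        while q:
            u = q.popleft()
            for v in adj[u]:
                if v not in seen:
                    seen[v] = seen[u] + 1
                    q.append(v)
        for v, d in seen.items():
            D[src, v] = d
    return D

def classical_mds(D, dims=2):
    """Classical MDS: embed nodes so Euclidean distances approximate D."""
    n = D.shape[0]
    J = np.eye(n) - np.ones((n, n)) / n          # centring matrix
    B = -0.5 * J @ (D ** 2) @ J
    vals, vecs = np.linalg.eigh(B)               # eigenvalues in ascending order
    idx = np.argsort(vals)[::-1][:dims]          # keep the largest ones
    return vecs[:, idx] * np.sqrt(np.maximum(vals[idx], 0))

# Two 4-node communities (cliques 0-3 and 4-7) joined by a single edge 3-4
adj = {i: set() for i in range(8)}
for group in (range(0, 4), range(4, 8)):
    for i in group:
        for j in group:
            if i != j:
                adj[i].add(j)
adj[3].add(4)
adj[4].add(3)

X = classical_mds(shortest_path_lengths(adj))
print(np.round(X[:, 0], 2))   # first coordinate: one sign per community
```

On this toy graph the first MDS coordinate has one sign for nodes 0-3 and the opposite sign for nodes 4-7: the communities separate using nothing but the adjacency structure.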

SR is not modeling long-term temporal dependencies and is less predictive than the Markov chain: you can think of SR as taking in the adjacency Markov chain and then ‘blurring’ it. It introduces more edges to the graph: a node is now connected not just to its neighbor, but also to the neighbor’s neighbor, and the neighbor’s neighbor’s neighbor. This is sometimes confused with modeling ‘long-term’ temporal dependencies, but it is not. The extra connections did not increase the predictive power; they decreased it, because after the blurring a node cannot distinguish between its neighbor and its neighbor’s neighbor. The Markov chain representing the adjacency matrix is a better ‘predictive map’ than SR: as a next-state predictor, the SR matrix performs worse than the simpler Markov chain.
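A small numerical illustration of the ‘blurring’, under assumed settings: a random walk on a ring of 12 locations, γ = 0.9, and SR rows renormalised to sum to 1 so they can be read as next-state predictions (one simple reading of SR as a predictor, chosen for this sketch). Because the data are generated by T, no predictor can beat T on expected log-likelihood (Gibbs' inequality); the sketch just makes the gap visible.

```python
import numpy as np

n, gamma = 12, 0.9
T = np.zeros((n, n))                 # random walk on a ring of n locations
for s in range(n):
    T[s, (s - 1) % n] = 0.5
    T[s, (s + 1) % n] = 0.5

M = np.linalg.inv(np.eye(n) - gamma * T)
P_sr = M / M.sum(axis=1, keepdims=True)   # SR rows renormalised into next-state guesses

def expected_log_likelihood(P):
    """Mean over start locations of E[log P(s_next | s)] when s_next is drawn from T."""
    with np.errstate(divide="ignore"):
        logP = np.where(T > 0, np.log(P), 0.0)
    return float((T * logP).sum(axis=1).mean())

ll_markov = expected_log_likelihood(T)     # log 0.5, the best achievable here
ll_sr = expected_log_likelihood(P_sr)      # lower: mass leaks to s itself and to s +/- 2, 3, ...
print(ll_markov, ll_sr, P_sr[0, 2], T[0, 2])
```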

Eigenvectors and grid cells? Another property attributed to SR is that its eigenvectors look like grid cells. But this, too, is just a property of the neighborhood graph; no SR is needed. Shown below are the first 20 eigenvectors of the graph of a room with a ‘hole’ in it.
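The eigenvectors of the SR come from the transition graph: if Tv = λv, then (I - γT)^(-1) v = v / (1 - γλ), so M = (I - γT)^(-1) has the same eigenvectors as T. The sketch below computes the first 20 eigenvectors of the combinatorial graph Laplacian L = D - A of a room with a hole, used here as a stand-in for the neighbourhood graph. The room size, the hole position and the choice of Laplacian are assumptions, since the construction behind the figure is not specified.

```python
import numpy as np

n = 12                                                      # hypothetical 12 x 12 room
hole = {(r, c) for r in range(4, 8) for c in range(4, 8)}   # 4 x 4 block in the middle
cells = [(r, c) for r in range(n) for c in range(n) if (r, c) not in hole]
index = {cell: i for i, cell in enumerate(cells)}

# Adjacency of the walkable cells (4-neighbour moves): just the neighbourhood graph
A = np.zeros((len(cells), len(cells)))
for (r, c), i in index.items():
    for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
        j = index.get((r + dr, c + dc))
        if j is not None:
            A[i, j] = 1.0

L = np.diag(A.sum(axis=1)) - A          # graph Laplacian; no SR involved
vals, vecs = np.linalg.eigh(L)          # eigenvalues in ascending order
first20 = vecs[:, :20]

def as_image(v):
    """Place an eigenvector back on the room grid, with NaN inside the hole."""
    img = np.full((n, n), np.nan)
    for (r, c), i in index.items():
        img[r, c] = v[i]
    return img

maps = [as_image(first20[:, k]) for k in range(20)]   # e.g. view each with matplotlib's imshow
```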

(Here’s a simple argument for why this grid-cell idea does not make intuitive sense to me: deriving this representation requires representing the ‘hole’ in as much detail as the walkable areas of the room.)

Questioning the ethological assumption behind SR.

SR is motivated by the computational complexity of an RL task: maximizing rewards when multiple states in the environment lead to rewards. For example, if different amounts of cheese are available at different locations in the environment, as in the figure below (from Stachenfeld et al 2016), the RL problem is to find an optimal policy for obtaining the rewards.
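For reference, the computational selling point of SR in this setting is that, once M has been cached for a fixed policy, the values for any reward layout are a single matrix-vector product, V = M r. The sketch below assumes a hypothetical 5 x 5 grid, a random-walk policy and three made-up cheese amounts, and checks that V = M r agrees with solving the Bellman equation V = r + γTV directly. Note that this evaluates the fixed policy; it does not by itself produce the optimal policy.

```python
import numpy as np

n, gamma = 5, 0.9
N = n * n
T = np.zeros((N, N))              # random-walk policy on a hypothetical 5 x 5 grid
for s in range(N):
    r, c = divmod(s, n)
    nbrs = [(r + dr) * n + (c + dc) for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1))
            if 0 <= r + dr < n and 0 <= c + dc < n]
    T[s, nbrs] = 1.0 / len(nbrs)

M = np.linalg.inv(np.eye(N) - gamma * T)   # SR under this fixed policy

reward = np.zeros(N)              # hypothetical cheese amounts at three locations
reward[[0, 12, 24]] = [1.0, 3.0, 0.5]

V_sr = M @ reward                 # SR: one matrix-vector product per new reward layout
V_mb = np.linalg.solve(np.eye(N) - gamma * T, reward)   # model-based: solve Bellman equation
```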

Here are my questions on this:

Is there any evidence that animals can optimize behavior in such settings where there are multiple rewards?

How are these rewarding states even communicated to the animal when the animal cannot see all the states? Seems like a hard problem.

Basically, I’m questioning the computational optimization premise behind SR — I’m asking whether animals/humans actually solve the RL problem that is taken as the assumption for motivating SR.

Navigating to a single goal location, or even chaining a couple of them, is not computationally inefficient in a model-based setting, but SR assumes that this is not sufficient and that the RL problem needs to be solved in the more general setting, the ethology of which seems questionable to me.

What about evidence for SR in humans?

The paper below (Momennejad et al 2017) is often cited as evidence for SR in humans. It is definitely a very impressive and fascinating behavioral experiment, but on a close reading I am left with the impression that the paper actually provides strong evidence for model-based reasoning in humans, and only weak support for SR.

Let’s look at Fig 5 of the paper, which overlays the human experimental data on the predictions of “Model-based” and “SR” for two tasks; let’s just call them “gray” and “red” for now. The gray and red bars in these plots are the theoretical predictions, and the little dots with error bars are the observed human data. I have annotated the plot to make this easy to see.

The impression these plots give me is that 1) model-based reasoning (“pure MB”) is a pretty good fit to what is actually observed in humans, and 2) successor representations (“pure SR”) are a bad fit.

“Pure MB” is such a close fit, while SR does not predict how humans behave in the “red” condition at all. In fact, this plot seems to provide strong evidence against SR.

But that is not what the paper concluded. Here is the entire figure 5.

The paper utilizes the minor discrepancy between Pure-MB performance and human performance to argue for a hybrid model that post-hoc combines SR with MB, without actually giving a method for how this combination would be achieved by an animal. This post-hoc hybrid SR-MB is then what is cited as evidence for SR in humans.

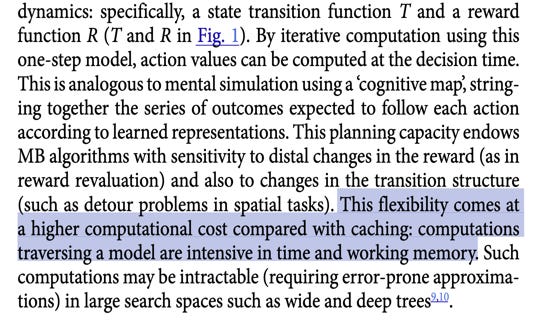

The hybrid SR-MB does not make sense to me. The MB method has all the information to outperform SR in both the gray and red conditions. The motivation for SR rests on the idea that the MB computations are expensive, as described in the introduction of the paper.

However, running the SR-MB combination used in the paper (a linear combination of SR and MB) defeats the efficiency argument: it requires running both SR and MB, which is more expensive than running MB alone. The paper says there are other ways to combine them, but what was used for the fit was a linear combination that doesn’t make computational or ethological sense.

Latent-state formation could be an alternative explanation:

Could there be another explanation for the minor discrepancy between MB performance and the reported human performance? I think so. Interestingly, the alternative explanation is based on the idea of latent variables.

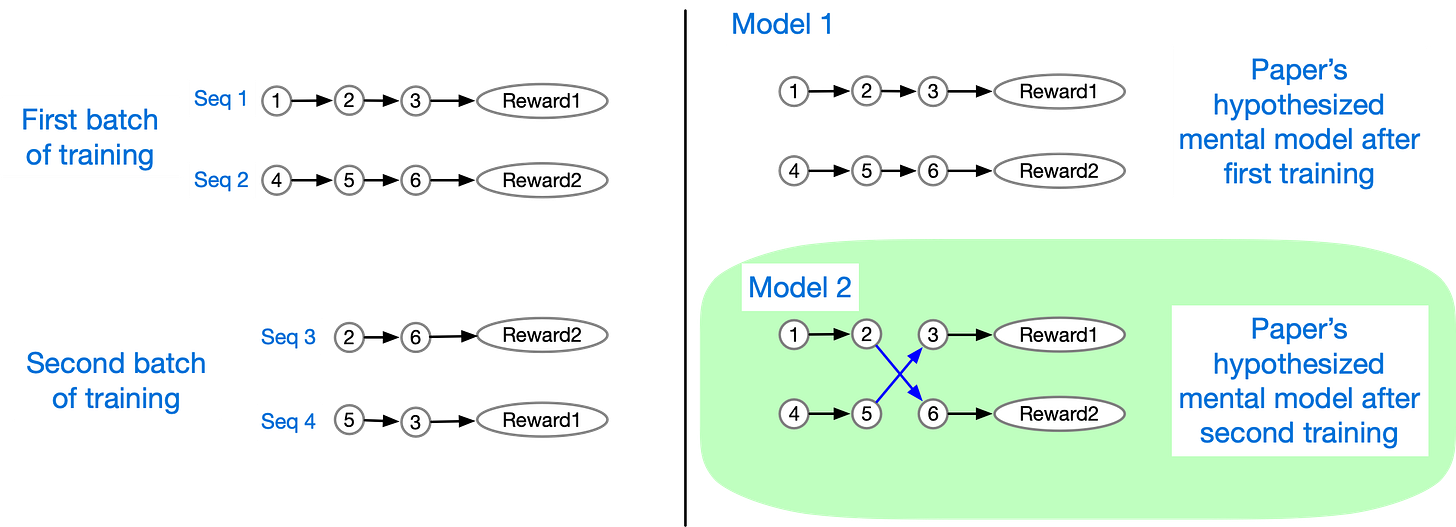

Consider the ‘red’ task, ‘transition revaluation’:

Humans are first trained to memorize two 3-step sequences.

Seq1: 1→2→3→Reward1,

Seq2: 4→5→6→Reward2

After they learn seq1 and seq2, they are trained with two two-step sequences.

Seq3: 2 → 6 → Reward2

Seq4: 5 → 3 → Reward1

Now, here’s the crux: How would humans interpret the second set of training sequences? What mental model do they build after the training is complete?

In the paper's interpretation, participants learn Model-1 at the end of the first batch of training, which exposed them to seq1 and seq2. According to the paper, participants then treat the second set of sequences (seq3 and seq4) as edits to Model-1 (transition revaluation), leading them to change their mental model to the highlighted Model-2 in the figure above. The blue arrows are the edits.
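The contrast this task is designed to probe can be sketched with a tabular TD-learned SR versus a simple one-step model, using the state labels above. The reward sizes (Reward1 = 10, Reward2 = 1), γ, α and the episode counts are hypothetical, and this is the textbook contrast, not the paper's fitting procedure. Because state 1 is never visited in the second phase, its SR row is never updated.

```python
import numpy as np

# Task states 1..6 map to indices 0..5; terminal reward states R1, R2 are 6 and 7
R1, R2 = 6, 7
N, gamma, alpha = 8, 0.9, 0.5
reward = np.zeros(N)
reward[R1], reward[R2] = 10.0, 1.0      # hypothetical reward sizes

def idx(k):
    return k - 1                         # task state k -> array index

M = np.eye(N)                            # SR rows; terminal rows stay one-hot
model = {}                               # model-based learner: next state of each state

def train(seq, episodes=200):
    for _ in range(episodes):
        for s, s_next in zip(seq[:-1], seq[1:]):
            M[s] += alpha * (np.eye(N)[s] + gamma * M[s_next] - M[s])   # TD update of SR
            model[s] = s_next                                          # MB: overwrite edge

def mb_value(s):
    """Follow the deterministic learned model to a reward state, discounting each step."""
    discount = 1.0
    while s not in (R1, R2):
        s = model[s]
        discount *= gamma
    return discount * reward[s]

# Phase 1: Seq1 1->2->3->R1 and Seq2 4->5->6->R2
train([idx(1), idx(2), idx(3), R1])
train([idx(4), idx(5), idx(6), R2])
# Phase 2 (transition revaluation): Seq3 2->6->R2 and Seq4 5->3->R1
train([idx(2), idx(6), R2])
train([idx(5), idx(3), R1])

v_sr = {k: M[idx(k)] @ reward for k in (1, 4)}
v_mb = {k: mb_value(idx(k)) for k in (1, 4)}
print(v_sr, v_mb)
```

Under these assumptions the SR still prefers starting at 1 (value γ³·10), while the model-based learner now prefers 4, which is the revaluation signature the experiment looks for.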

But that is not the only interpretation humans can make. Here is another interpretation.

If the sequence starts at 1, then the next steps are 2→3→Reward1. But if the sequence starts at 2, with no preceding context, then the next steps are 6→Reward2.

Interestingly, SR has no mechanism to represent such a contingency, but humans and animals can! It is also trivial to represent this in CSCG, as shown below:
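A hand-built sketch of the ‘latent state’ reading: observation 2 gets two latent clones, `2a` (after 1) and `2b` (at the start of a sequence), and likewise 5 gets `5a` and `5b`. This only illustrates the clone idea with hand-written transitions; it is not CSCG's learning algorithm, and all names are hypothetical. For comparison, a first-order model over the observed states can only say that 2 is followed by either 3 or 6.

```python
NEXT = {"1": "2a", "2a": "3", "3": "R1",      # Seq1: 1 -> 2 -> 3 -> Reward1
        "4": "5a", "5a": "6", "6": "R2",      # Seq2: 4 -> 5 -> 6 -> Reward2
        "2b": "6",                            # Seq3: 2 (no context) -> 6 -> Reward2
        "5b": "3"}                            # Seq4: 5 (no context) -> 3 -> Reward1
EMIT = {"1": "1", "2a": "2", "2b": "2", "3": "3", "4": "4", "5a": "5", "5b": "5",
        "6": "6", "R1": "Reward1", "R2": "Reward2"}
STARTS = {"1", "4", "2b", "5b"}               # latent states that can begin a sequence

def predict_next(observed):
    """Keep the latent states consistent with the observed prefix, then predict the next observation."""
    latents = {z for z in STARTS if EMIT[z] == observed[0]}
    for obs in observed[1:]:
        latents = {NEXT[z] for z in latents if z in NEXT and EMIT[NEXT[z]] == obs}
    return {EMIT[NEXT[z]] for z in latents if z in NEXT}

SEQS = [["1", "2", "3", "Reward1"], ["4", "5", "6", "Reward2"],
        ["2", "6", "Reward2"], ["5", "3", "Reward1"]]

def first_order_next(sequences, obs):
    """What a first-order model over observed states allows after `obs`."""
    return {b for seq in sequences for a, b in zip(seq, seq[1:]) if a == obs}

print(predict_next(["1", "2"]), predict_next(["2"]), first_order_next(SEQS, "2"))
```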

Without additional information or constraints, there is not much to choose between these two interpretations. Some humans might modify the existing Model 1 and treat this as transition revaluation, while others might treat it as a new set of latent variables.

I think this ambiguity in the task design is a potential alternative explanation for the observed phenomena. The observations in that paper raise interesting questions given what we now know about the ability of hippocampus to create latent variables.

What about successor representations in animals?

De Cothi et al did an ingenious experiment on both rats and humans to test navigation in changing environments. The experiment is extremely interesting, and I highly recommend reading the paper. In my reading, the performance of the agents, in terms of how well they achieve the task, seems to be best explained by model-based planning, not SR, but that is not the impression you’d get if you just scan the abstract.

The experiment tested the ability of rats and humans to navigate an environment and reach a goal they had initially learned in an open arena; they were then tested in 25 different maze configurations in which blocks were removed to create barriers. The goal location remained the same, and each configuration was tested for 10 trials.

Shown below are the performance graphs, for rats, humans and RL agents.

Here are my observations from the above graphs:

Panel A: SR significantly underperforms humans, especially early on.

Panel A: Human performance is very close to model-based reasoning (“MB”).

Panel B: SR underperforms rats, and catches up to rat performance only by trial 10.

Panel B: Ideal MB performance is better than rat performance.

However, the paper emphasizes a particular aspect of the trajectories taken by the agents, and by that measure finds SR to be the most similar (see plots below). Even there, though, model-based planning is more similar to humans and rats than SR is in the first 5 trials.

The paper argues that as the agent gains more experience, it makes sense for it to switch to SR because SR is less computationally demanding. But it is just as easy to cache previously derived plans and replan only when the actions of a plan are not executable. In the paper's MB implementation, the agent replans after every step, but replanning could be done intermittently. Many such variations of model-based planning could make it an even better fit for the observed behavior.
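A minimal sketch of the ‘cache the plan, replan only when blocked’ variant, assuming a grid world, breadth-first search as the planner, and an agent that only discovers a barrier when its next planned step is blocked. The arena, the wall layout and the function names are hypothetical; this is not the MB agent used in the paper.

```python
from collections import deque

def bfs_plan(free, start, goal):
    """Shortest 4-neighbour path over a set of free grid cells; returns a list of cells or None."""
    prev = {start: None}
    q = deque([start])
    while q:
        cell = q.popleft()
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = prev[cell]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if nxt in free and nxt not in prev:
                prev[nxt] = cell
                q.append(nxt)
    return None

def navigate(believed_free, true_free, start, goal):
    """Follow a cached plan; replan only when its next step turns out to be blocked.

    Assumes the goal is reachable in the true environment.
    """
    believed = set(believed_free)
    plan, i = bfs_plan(believed, start, goal), 0
    steps, replans = 0, 0
    while plan[i] != goal:
        nxt = plan[i + 1]
        if nxt not in true_free:              # barrier discovered: update the model, replan
            believed.discard(nxt)
            plan, i = bfs_plan(believed, plan[i], goal), 0
            replans += 1
            continue
        i, steps = i + 1, steps + 1           # step is executable: keep using the cached plan
    return steps, replans

n = 5
open_arena = {(r, c) for r in range(n) for c in range(n)}
wall = {(r, 2) for r in range(4)}             # hypothetical barrier with a gap at the bottom
steps, replans = navigate(open_arena, open_arena - wall, (0, 0), (0, 4))
print(steps, replans)
```

Here the planner runs once at the start plus once per discovered blocked cell, rather than after every step.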

Also, some of the reasoning given in favor of SR in the paper seems to be incorrect. When the environment changes, SR’s biases are likely to hurt rather than help. This is reflected in the performance: SR significantly underperforms MB, as well as the animals and humans, in the first 5 trials.

What could be the reason for humans and animals underperforming ideal model-based planning? My guess: partial observability of the environments. Humans and animals in the experiment received visual sensory inputs, not location indices. The simulated ideal models received the actual location indices as inputs! It should therefore not be surprising that humans and animals underperform this ideal model.

This raises another reason why these experiments should be considered evidence against SR: the SR model, which receives ideal location indices as inputs, underperforms rats that receive only visual sensory inputs!

It is clear that this paper raises a lot of interesting questions with an ingenious set of experiments, and I hope there will be more follow up studies. My overall take is that the experiments are not showing strong evidence for SR, but showing evidence for model-based planning.

Conclusion

I hope I have provided sufficient arguments and evidence for the following:

The successor representation (SR) is NOT a model of place cell learning in the hippocampus. SR cannot explain how place fields are formed.

Additional properties ascribed to SR, like “predictive states” and the ability to form hierarchies, are properties of simple Markov chains, not of SR itself.

The experiments in humans and animals that report evidence for SR might NOT be providing strong evidence for SR, and are more likely evidence for model-based planning.

Acknowledgement

Thanks to Miguel Lázaro-Gredilla for feedback on early drafts of this blog.
