Space is a sensory-motor sequence in the hippocampus

A guide for cognitive/neuro scientists for reinterpreting space and cognitive maps

Recently we published a paper in Science Advances titled Space is a latent sequence: A theory of the hippocampus. I believe this paper offers a view of the hippocampus that is drastically different from the space-centric view. This blog is a companion to the paper, and includes some visualizations and animations that make the ideas easier to digest. I believe anyone who’s interested in the hippocampus will benefit from reading this.

Starter code: A Google colab Jupyter notebook is available with starter code. You can run it from the browser without installing anything, and it takes less than 5 minutes to train a basic model and see how it works. Click here for the Jupyter notebook.

The traditional view: Space-centric

Place cells, neurons that respond to specific locations, are a robust and striking phenomenon in the hippocampus, and the currently dominant paradigm treats the hippocampus as representing space in Euclidean terms. Hippocampal neuron activations are often visualized as place fields.

However, anomalies to this basic story have been accumulating, with more and more new and divergent phenomena being discovered. These are then explained by invoking new concepts and new types of cells, without a unifying principle connecting them all. Alternative views that treat the hippocampus as a sequence model have been emerging. See this paper for example, and many more are cited in our paper. Although these views pointed in the right direction, they had not yet been turned into a concrete model that shows how space gets represented as time, and how that can resolve many of the mysterious phenomena. Read the recent review ‘Remapping revisited’ to see how confusing the picture is.

What we show in our paper is how these anomalies get resolved when we stop thinking of hippocampus in spatial/Euclidean terms, and instead think of space/place as a sequence. In the next few sections, I try to explain these ideas using some visualizations that make it easier to understand.

The problem that hippocampus has to solve

Humans and other mammals do not have a GPS or compass, and cannot sense location coordinates. Our ideas about space and locations have to arise from our sensory-motor experience. (Or be hard-coded a priori à la Kant; more on this in a later section.)

The trouble is that sensations + actions do not have a one-to-one correspondence with locations. Due to perceptual aliasing, identical sensations can occur at different locations, and the same location can be approached via different sensory-motor sequences. The idea of space has to arise from this sequence of aliased observations that do not convey locations directly. But how?
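To make perceptual aliasing concrete, here is a minimal sketch. The tiny room, its floor colors (`g`, `b`), and the helper name `locations_by_observation` are all hypothetical, chosen only for illustration. It groups locations by what the agent would sense there, showing that a single sensation does not identify a location.

```python
from collections import defaultdict

def locations_by_observation(room):
    """Group grid locations by the observation sensed at each one."""
    groups = defaultdict(list)
    for r, row in enumerate(room):
        for c, obs in enumerate(row):
            groups[obs].append((r, c))
    return dict(groups)

# Hypothetical 3x3 room: each character is the floor color seen at that cell.
room = ["ggb",
        "gbb",
        "ggg"]

groups = locations_by_observation(room)
for obs, locs in groups.items():
    # Every observation maps to several locations, so sensing alone is ambiguous.
    print(obs, len(locs), locs)
```

With only two colors covering nine cells, every observation is shared by several locations; disambiguating them requires the sequence of observations and actions that led there.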

Interestingly, our model, clone-structured causal graphs (CSCG) can do this exact thing. Given a sequence of aliased egocentric sensory-motor inputs, it can induce a latent graph that corresponds to the generative model of the environment as the agent experienced it. This also works in 3D environments with rich high-dimensional visual sensations as the input, and on arbitrary non-Euclidean topologies.

How CSCG represents different sequential contexts in a latent graph

Shown on the left in the figure below is the top-down view of a 3D environment, with three action sequences marked in orange, green, and purple. These action sequences generate the visual observation sequences A→D→E (purple), B→D→F (green), and C→D→G (orange). The visual observation D repeats in all three sequences. In the green and purple sequences, D occurs in the same location, even though it occurs in different sequential contexts, but the D in C→D→G (orange) is in a different location.

The latent space representation of CSCG has multiple states that are “clones” of the same observation. These clones can be used to represent the different (latent) temporal contexts that an observation can occur in, and the model has the flexibility to either merge or split these contexts. For example, the D in the purple and green sequences needs to be merged into the same latent clone even though it occurs in different contexts, whereas the D in the orange sequence needs to be kept in a different latent clone. Both these clones are connected to the same instantaneous observation D, but they will activate in different temporal contexts based on the clones that connect to them.

There are two types of connections in CSCG. 1) ‘Lateral’/temporal/transition connections: These are the connections between the latent clones, and are the same as the weighted edges of the latent transition graph. 2) ‘Bottom-up’ connections: These are the connections from an observation to its clones. Each observation’s activation strength is the ‘bottom-up’ input into each of its clones. The overall activation of a clone is determined by combining the bottom-up input with the lateral input.

Shown below is a visualization of the latent clone-structured graph and how a learned graph corresponds to the topology of the environment. CSCG is initialized by allocating a clone capacity, that is, the number of clones for each observation. Typically, over-allocating the capacity works better for learning. More details about the learning dynamics are discussed in the paper, but for now let’s understand what this graph represents.
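The way lateral and bottom-up inputs combine can be sketched as a forward filtering step in a hidden Markov model whose states are grouped into clones. This is a minimal illustration and not the code from the paper or its notebook: the function names, the state layout, and the hand-built transition matrix for the A/B/C→D→E/F/G example are assumptions made for this sketch.

```python
import numpy as np

def clone_slices(n_clones):
    """Index range of the clones belonging to each observation (stored contiguously)."""
    ends = np.cumsum(n_clones)
    starts = ends - n_clones
    return [slice(int(s), int(e)) for s, e in zip(starts, ends)]

def forward_filter(T, n_clones, obs):
    """Clone activations over time: lateral input gated by bottom-up input."""
    slices = clone_slices(n_clones)
    n_states = T.shape[0]
    msg = np.zeros(n_states)
    msg[slices[obs[0]]] = 1.0 / n_clones[obs[0]]  # first step: bottom-up input only
    history = [msg]
    for o in obs[1:]:
        lateral = msg @ T                  # input arriving over transition edges
        bottom_up = np.zeros(n_states)
        bottom_up[slices[o]] = 1.0         # only clones of the current observation
        msg = lateral * bottom_up
        msg = msg / msg.sum()
        history.append(msg)
    return np.array(history)

# Observations: A=0, B=1, C=2, D=3, E=4, F=5, G=6. D gets two clones.
n_clones = np.array([1, 1, 1, 2, 1, 1, 1])
# Latent states: A=0, B=1, C=2, D1=3, D2=4, E=5, F=6, G=7 (hand-built, not learned).
T = np.zeros((8, 8))
T[0, 3] = 1.0               # A -> D1
T[1, 3] = 1.0               # B -> D1 (same location as the D after A, so merged)
T[2, 4] = 1.0               # C -> D2 (a different location, so kept separate)
T[3, 5] = T[3, 6] = 0.5     # D1 -> E or F
T[4, 7] = 1.0               # D2 -> G

after_A = forward_filter(T, n_clones, [0, 3])[-1]
after_C = forward_filter(T, n_clones, [2, 3])[-1]
print(after_A[3:5], after_C[3:5])  # activations of D1 and D2 in each context
```

The same observation D activates clone D1 after A and clone D2 after C, because the bottom-up input is identical but the lateral input differs.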

Keep in mind that what is learned is a graph. We can see the correspondence of that graph with the environment when it is visualized in 2D, but the 2D layout is something we impose externally. The correspondence to the learned environment is not obvious when the graph is plotted out in the ‘clone-structured’ layout.

Place fields: How sequence neurons get interpreted as responding to locations

Which observation does each of the clones in the graph respond to? That is easy: The clones are colored by the observation neuron that is connected to them. For example, the gray colored neurons respond to gray color. Importantly though, they respond to the gray color only when it occurs in specific sequential contexts. This is visualized below.

So far, we have been discussing only latent graphs and sensation sequences. We haven’t invoked anything about space, geometry, location etc. The clone neurons in our graph respond to specific sequences. Then how does this get interpreted as responding to locations? Let’s do what is done in neuroscience: plot the “place field” of a clone neuron. We’ll see that plotting the place field is what injects the interpretation of location/space into the neural responses.

To plot the place field we do the following:

1) Insert a probe into the rat’s brain to record from the neuron of interest.

2) Make a 2D plot that is the same shape as the room.

3) Let the rat run around in the room, while we observe the neuron and rat’s location simultaneously.

4) When the neuron fires, we look at the rat’s location at that instant. We add the firing strength of the neuron to that location in the 2D plot, and accumulate this over time.

These steps are visualized in the figure above, and the place field of the marked neuron comes out as shown.
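The plotting procedure above can be sketched in a few lines. The function name `place_field` and the toy trajectory are hypothetical; the point is that the rat’s ground-truth position is an input supplied by the experimenter, not something the neuron has access to.

```python
import numpy as np

def place_field(positions, activations, room_shape):
    """Accumulate a neuron's firing strength at the rat's location at each instant."""
    field = np.zeros(room_shape)           # 2D plot in the shape of the room
    for (row, col), strength in zip(positions, activations):
        field[row, col] += strength        # add firing strength at that location
    return field

# Hypothetical recording: (row, col) of the rat and the neuron's activation per step.
positions = [(0, 0), (0, 1), (0, 0), (1, 1)]
activations = [1.0, 0.5, 2.0, 0.0]

field = place_field(positions, activations, room_shape=(2, 2))
print(field)
```

Experimental analyses commonly also divide by the time spent at each location; that normalization is left out here because the steps above only describe accumulation.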

Note that the decisions to make a 2D plot in the shape of the room, and to overlay neural responses based on rat’s location were made by the experimenter. The animal itself has no mechanism to plot a place field (because plotting it requires knowing the ground-truth location), and it has no use of plotting one either.

Place fields make us think the neuron is responding to location: If you plot the place fields of the different clones above, you will see that each neuron responds at a different location, and they systematically cover all the locations in the room. This makes us think that these neurons are responding to locations.

But in reality, the neurons are not responding to locations. They are responding to the sequence of sensory observations that lead to the location.

Crucial distinction: A neuron responding to the sequence of sensory inputs leading to a location is not the same as the neuron responding to the location. Mixing these up is the source of a lot of confusion related to place-field remapping, as we will see below.

Explaining geometric determinants of remapping without space or geometry!

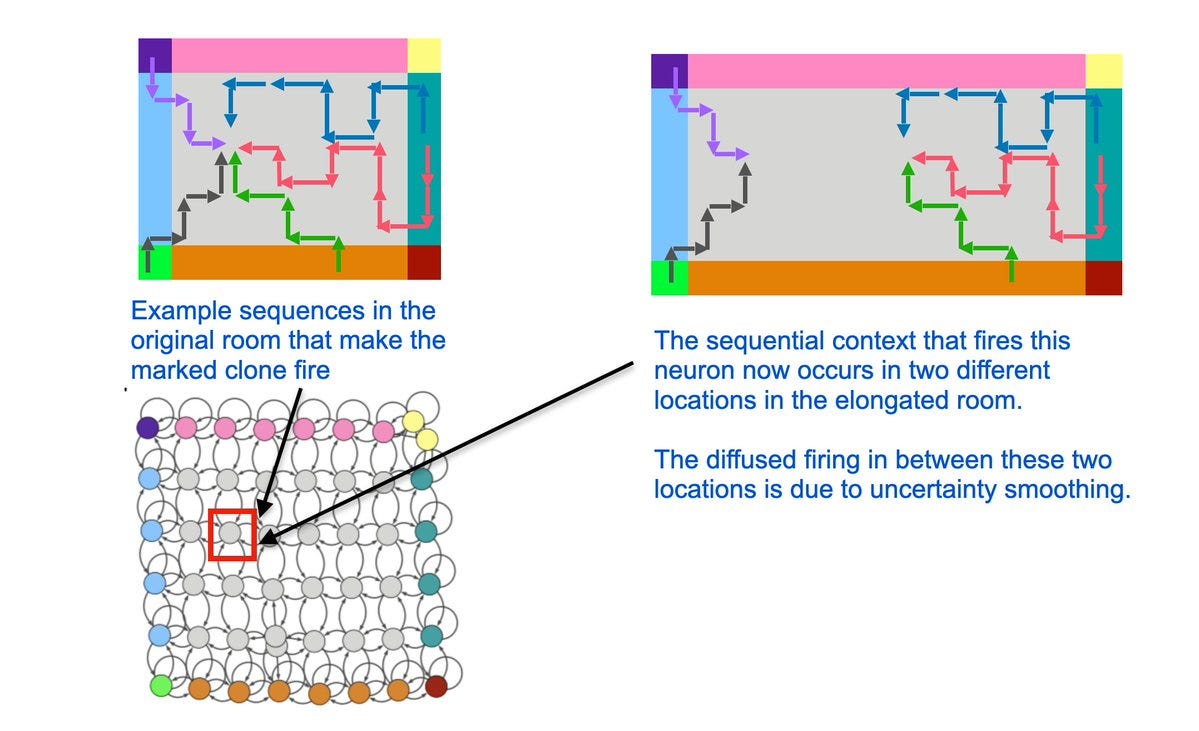

Consider this question: What will happen to the place field of the neuron above if we train the rat in the original room, but test the rat in an elongated room when we are plotting the place field?

Since we know how to plot place fields, and since we have a model, we can run this experiment directly, as visualized below.

We just repeated the same process for plotting the place field, this time making a larger 2D plot corresponding to the shape of the elongated room, and marking and accumulating the neuron’s response on to the current location of the rat in the elongated room.

We see that the original place field ‘remapped’ into a bi-modal place field in the elongated room!

In fact, we just reproduced a classic finding from a well-known paper called ‘Geometric determinants of the place fields of hippocampal neurons’, but in CSCG the remapping occurred without having to use any geometrical concepts at all!

Why does this remapping happen? This is easy to understand using CSCG, as shown in the figure below. In the elongated room, the sequential contexts that fired the neuron in a single location in the original room now occur in two different locations. Remember that the neuron was never responding to locations; it was always responding to the sequence of observations.

Prior models that explain this phenomenon relied on geometric concepts. One example is the boundary-vector-cell model, which, as you can guess, relies on cells coding vector distances from the boundaries of the room. But that model could not explain the direction-dependence of the remapping, the result of another ingenious experiment by O’Keefe and Burgess. They asked: “What if we plot separate place fields, one while the rat is running to the left, and another while the rat is running to the right?” Well, you can read off the answer from the figure above. When the rat is running to the right, the left lobe of the place field will be active, and when the rat is running to the left, the right lobe of the place field will be active. This is what they observed, and it is also readily reproduced by CSCG.
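Here is a toy illustration of why a sequence-driven neuron produces a bimodal, direction-dependent place field in a stretched room. It is not CSCG: the 1D track, the observation labels (`L`, `R`, `f`), and the hand-written `sequence_neuron` are assumptions standing in for a learned clone that fires two steps after leaving either wall.

```python
def observe(pos, track_len):
    """What the rat senses: left wall, right wall, or plain floor."""
    if pos == 0:
        return "L"
    if pos == track_len - 1:
        return "R"
    return "f"

def sequence_neuron(history):
    """Fires on the context 'wall, floor, floor', whichever wall it started from."""
    return 1.0 if tuple(history[-3:]) in {("L", "f", "f"), ("R", "f", "f")} else 0.0

def place_fields(track_len):
    """One place field per running direction, plotted against true position."""
    runs = {
        "rightward": range(track_len),
        "leftward": range(track_len - 1, -1, -1),
    }
    fields = {}
    for direction, path in runs.items():
        field = [0.0] * track_len
        history = []
        for pos in path:
            history.append(observe(pos, track_len))
            field[pos] += sequence_neuron(history)
        fields[direction] = field
    return fields

original = place_fields(5)    # track the neuron was "tuned" on
elongated = place_fields(7)   # stretched track, same neuron, nothing relearned
print(original)
print(elongated)
```

On the 5-cell track both running directions fire the neuron at the center cell, giving a single field. On the 7-cell track the same contexts end at two different cells: the rightward run fires at the left lobe and the leftward run at the right lobe, with no change to the neuron itself.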

Another key thing to note is that, in this case, no connections in the model changed, even though the place field changed dramatically. Although the place field now looks very different, the model has remained exactly the same. The opposite can also be true: there are cases when the model’s connectivity can change, and the animal’s behavior changes accordingly, but the place field can remain largely intact. As we stated earlier, these phenomena look puzzling only when we look at hippocampus neurons as encoding location, and our paper explains how these get resolved.

If you understood the mechanics of how the above remapping happens, you are in a position to understand a vast array of phenomena in the hippocampus. Here’s the full list of phenomena we considered and explained in the paper. CSCG can explain many more, but we had to be selective given length constraints.

Place field expansion, distortion, and repetition

In large empty rooms, place cells in the center of the room have larger place fields than those in the periphery, and the place cells along the edges of the room have elongated place fields. When we train rats in two identical rooms that face in the same direction and are connected by a corridor, place fields often repeat in these rooms, in the same relative positions. How does CSCG explain these phenomena?

As the size of an empty room increases, the aliasing of the observed sensory sequences increases in the center of the room. As a result, CSCG is not able to learn a perfect graph: the graph quality will be degraded in the middle of the room, in the sense that it will not resolve many of the aliased sensations into different clones. The degraded graph is still useful, but will have ‘lower resolution’ in the center. If you plot the place fields of the clones in the middle, just like we did earlier, they will show an expanded field compared to the neurons that represent the corners or the periphery. In the periphery, aliasing is elongated along the edge where identical observations occur, resulting in elongated place fields.

A similar thing happens when two identical rooms are connected by an undifferentiated corridor. Learning then often fails to recover the two rooms as distinct, recovering only a graph like the one shown above. This will cause the place field to repeat in the identical rooms, in the same relative positions.

Place field repetition in two identical rooms and place field expansion in the center of an empty room happen for the same reason: imperfect learning that results in aliased latent graphs. Expansion is the same as repetition, just happening locally.
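The geometry of this aliasing can be illustrated with a simplified count. The sketch below assumes, purely for illustration, that the agent senses only which of its eight neighboring cells are walls; real aliasing in CSCG concerns sequences of observations, not single snapshots. The helper names `local_view` and `aliasing_map` are hypothetical.

```python
from collections import Counter

def local_view(r, c, height, width):
    """3x3 snapshot around a cell: True wherever the neighbor lies outside the room."""
    return tuple(
        not (0 <= r + dr < height and 0 <= c + dc < width)
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
    )

def aliasing_map(height, width):
    """For each cell, how many cells in the room share its exact local view."""
    views = {(r, c): local_view(r, c, height, width)
             for r in range(height) for c in range(width)}
    counts = Counter(views.values())
    return [[counts[views[(r, c)]] for c in range(width)] for r in range(height)]

small = aliasing_map(5, 5)
large = aliasing_map(7, 7)
for row in large:
    print(row)
```

Corners are unique, cells along a wall are aliased only with other cells along that same wall, and the block of aliased cells in the center grows with the room (9 cells in the 5x5 room, 25 in the 7x7 room), which mirrors the expanded central fields and elongated edge fields described above.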

CSCG schemas for structure transfer

Although we didn’t cover this in detail in our paper, it is worth knowing how CSCG latent graphs can be used as schemas.

The idea is quite simple, and is visualized above. The nodes in the latent graph are mapped to observations via the ‘emission matrix’. For example, all the green clones in the graph on the left are connected to the green observation, and all those clones represent the green floor of the room. However, we can disconnect the specific observation mapping that was learned in a particular room. What we are left with is a latent graph with ‘slots’ in it.

If the agent now encounters a new room with similar structure, but different appearance, it can re-use the structure and just relearn the mappings of the latent nodes to observations.

You can think of the original graph that was learned for a specific room as a ‘grounded graph’ — the nodes are colored by the observation mappings. If you remove this coloring, then what is left is a ‘schema’, or an ‘ungrounded graph’, but we can learn a new color-map for this graph in a new environment by ‘binding’ the ungrounded nodes. This learning process just needs adapting the emission matrix while the transition matrix is held fixed — this learning is extremely fast.
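Rebinding can be sketched as expectation-maximization that updates only the emission matrix while the transition matrix stays frozen. This is a minimal illustration under stated assumptions, not the procedure from the paper: the function `rebind_emissions`, the four-state ring ‘schema’, and the new-room observation sequence are all hypothetical, and actions are omitted for brevity.

```python
import numpy as np

def rebind_emissions(T, obs, n_obs, n_iter=30, seed=0):
    """EM over the emission matrix E only; the transition matrix T is never updated."""
    rng = np.random.default_rng(seed)
    n_states, n_steps = T.shape[0], len(obs)
    E = rng.random((n_states, n_obs)) + 0.1          # random initial binding
    E /= E.sum(axis=1, keepdims=True)
    prior = np.full(n_states, 1.0 / n_states)        # unknown starting state
    log_liks = []
    for _ in range(n_iter):
        # Forward pass with per-step normalization.
        alpha = np.zeros((n_steps, n_states))
        scale = np.zeros(n_steps)
        for t, o in enumerate(obs):
            pred = prior if t == 0 else alpha[t - 1] @ T
            unnorm = pred * E[:, o]
            scale[t] = unnorm.sum()
            alpha[t] = unnorm / scale[t]
        # Backward pass using the same scaling constants.
        beta = np.ones((n_steps, n_states))
        for t in range(n_steps - 2, -1, -1):
            beta[t] = T @ (E[:, obs[t + 1]] * beta[t + 1]) / scale[t + 1]
        gamma = alpha * beta                         # posterior over latent states
        log_liks.append(float(np.log(scale).sum()))
        # M-step: re-estimate emissions from the posteriors (tiny floor avoids 0/0).
        counts = np.full((n_states, n_obs), 1e-12)
        for t, o in enumerate(obs):
            counts[:, o] += gamma[t]
        E = counts / counts.sum(axis=1, keepdims=True)
    return E, log_liks

# Hypothetical schema: four latent states on a ring, state i -> state i+1.
T = np.roll(np.eye(4), 1, axis=1)
# Observations from a "new room" with the same structure but new appearance.
new_room_obs = [0, 1, 0, 2] * 10

E_new, log_liks = rebind_emissions(T, new_room_obs, n_obs=3)
print(np.round(E_new, 2))
print(log_liks[0], log_liks[-1])
```

Because only the emission matrix is re-estimated, there are far fewer free parameters than when learning the whole graph, which is one way to read the claim that rebinding is fast. With a random start, EM can settle on any binding that is consistent with the ring structure.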

CSCG schemas are structural abstractions, and they enable transferring structural knowledge from prior experience to deal with new environments. In general, animals would be using a library of such prior-knowledge schemas rather than learning from scratch every time. These ideas are developed further in two of our papers: 1) ‘Graph schemas for transfer learning’, and 2) ‘Schema-learning and rebinding as explanation for in-context learning’ (NeurIPS 23 spotlight). The idea of learning latent graphs and using them for task transfer generalizes to other domains. For example, you could feed the model word tokens instead of sensory observations. Learning the structure of a sentence or an algorithm is in many ways similar to learning the structure of a room.

Planning and replay

As I mentioned earlier, the animal has no need for decoding locations or calculating place fields. Any navigation-related decision the animal needs to make can be made in terms of the latent states, without ever having to invoke ideas of space and location. Here is a visualization:

One core advantage of CSCG is that the latent representation is an explicit graph structure. This makes planning easy and efficient, and makes it easy to self-modify the model in response to environmental changes and re-plan. The latent graph can also be used as a schema to transfer structural knowledge efficiently, which gives CSCG a way to plan shortcuts through unvisited regions in novel environments.
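Planning and re-planning over an explicit latent graph can be sketched with a plain breadth-first search. This is a stand-in for the message-passing planner described in the paper, not a reproduction of it; the `plan` function, the four-state graph, and the action names are hypothetical.

```python
from collections import deque

def plan(graph, start, goal):
    """Shortest action sequence from start to goal over latent states (BFS)."""
    parent = {start: None}           # state -> (previous state, action taken)
    frontier = deque([start])
    while frontier:
        state = frontier.popleft()
        if state == goal:
            actions = []
            while parent[state] is not None:
                state, action = parent[state]
                actions.append(action)
            return actions[::-1]
        for action, nxt in graph.get(state, {}).items():
            if nxt not in parent:
                parent[nxt] = (state, action)
                frontier.append(nxt)
    return None                      # goal unreachable

# Hypothetical latent graph: four states arranged like a 2x2 room.
graph = {
    0: {"right": 1, "down": 2},
    1: {"left": 0, "down": 3},
    2: {"up": 0, "right": 3},
    3: {"up": 1, "left": 2},
}

first_plan = plan(graph, start=0, goal=3)

# The environment changes: the passage from state 1 down to state 3 is blocked.
del graph[1]["down"]
new_plan = plan(graph, start=0, goal=3)
print(first_plan, new_plan)
```

Nothing here refers to coordinates or distances: the plan is a path over latent states, and re-planning after a change only requires editing an edge and searching again.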

Schemas and planning + re-planning give CSCG some interesting properties that are also observed in animals. When encountering a new environment, the agent can utilize previously learned schemas to rapidly learn the environment. Moreover, the agent can take shortcuts in the new environment, and these shortcuts can be through portions of the environment that the agent hasn’t visited yet, as shown in the figure below.

As humans, we frequently encounter such scenarios. Imagine walking on the 3rd floor of an office building after experiencing the 2nd floor, which has the same layout. Although the 3rd floor has the same geometric layout, the decor, furniture, color scheme etc. could be very different from the 2nd floor. You can still plan paths through unvisited sections of the 3rd floor using your knowledge of the 2nd floor, but you would not be able to predict what objects or colors you’d encounter on those paths. These are examples of planning using schema-like abstract representations.

The following figure visualizes replay-like message passing for planning and replanning while using a schema to navigate a new environment that has a similar (but not identical) layout.

What about grid cells?

The picture consistent with CSCG is that grid cells are a complementary input — you could consider them as another modality that inputs self-motion information. This information is helpful, and can augment visual information, especially in uniform open arenas. The periodic tiling provided by grid cells is useful in reducing the aliasing of sensory inputs.

The interaction between grid cells and CSCG will be bidirectional — if the CSCG is trained with a particular phase of the grid cells, the latent representations can help the grid cells align with that familiar phase when the animal returns to that environment.

This combined model is yet to be developed and demonstrated, but CSCG is consistent with recent neuroscience experiments showing that grid cells are not necessary for place cell function. I highly recommend watching this video.

Other models, future work etc.

The paper has a lengthy discussion on connections to other theories of the hippocampus, but I plan to write a blog that will expand on these. I also plan to write more about the directions in which our research can be extended. If you are interested in these topics, consider subscribing.

Great ideas! Your idea of the HC as sequence learning over sensory perceptions makes so much more sense.

I have a question regarding the HC in humans, which I suppose handles learning sequences of sensory as well as more abstract "features". I'm also assuming intelligence is a repurposing of navigation capabilities in a hyperspace of mental representations, and problem solving is finding a mental "way" to an abstract "target".

In your paper you give the HC planning and schema transfer capabilities, i.e., requirements for general intelligence. In patient H.M. specifically, even without an HC, intelligence was normal (in the 1967 Milner paper, they even saw an improvement in arithmetic capabilities), so he was able to use previously learned sequences of abstract representations to solve daily tasks. How do you think he was able to achieve this without an HC?

Absolutely lovely visuals and explanations. I've thought a lot about this too, but I'm glad someone has tackled it more thoroughly.

To turn sequence learning into a map, does the agent require exhaustive exploration of the environment, or can it somehow extrapolate or predict its destination if it, say, takes a short cut? I always struggled with this missing component from the discussions at Numenta and have been working on trying to find a solution.

Not all input spaces can be exhaustively explored like the rat maze, so we would need some form of vector addition or cognitive space interpolation to navigate complex environments. Vectors don't seem to exist in the brain like we hope (directions and magnitudes do), so tools like sequence learning, perceptual clones, place cells, and grid cells need to be combined somehow to enable this spatial interpolation.