How to get a million bucks: quick thoughts on cognitive programs and the ARC challenge.

The Abstraction and Reasoning Corpus (ARC) challenge by François Chollet has gained renewed attention due to the $1M prize announcement. This challenge is interesting to me because the idea of “abstraction” as “synthesizing cognitive programs” is something my team has worked on and published, even before ARC was popularized. Because of this background, I think I have some insights about ARC that might be missed in a casual examination.

Concepts as cognitive programs

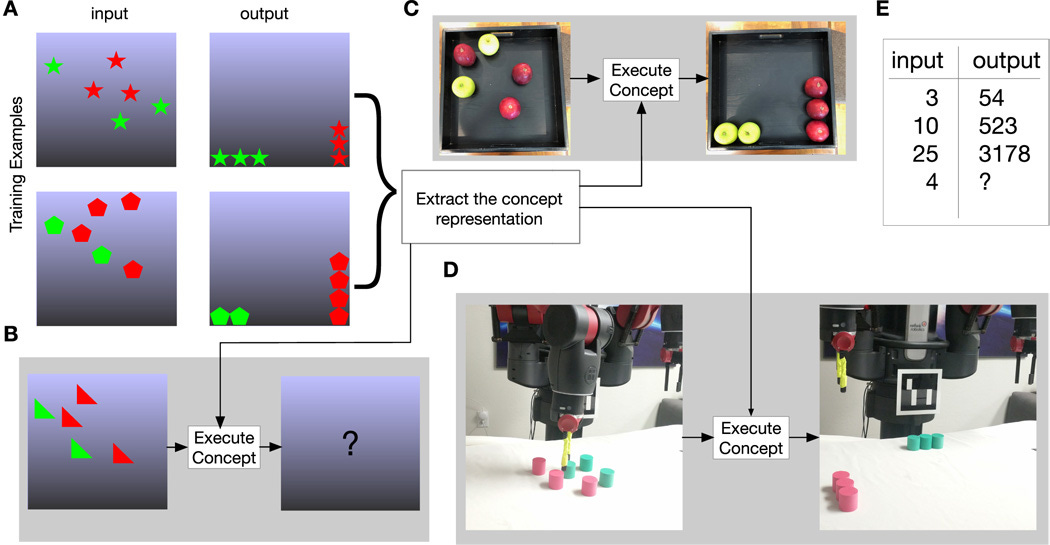

Let’s start by looking at Figure 1 of our paper (https://www.science.org/doi/10.1126/scirobotics.aav3150). You can see that ARC’s premise is the same: (A) from input-output image examples, infer the abstract concept that is conveyed. The concept can then be applied to a new image to create an answer image (B). Our setup went one step further by transferring this concept to real images (C) and even executing it on a robot (D).
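To make the "infer a concept from input-output pairs, then apply it to a new image" setup concrete, here is a minimal sketch in Python. The primitives (`flip_h`, `flip_v`, `transpose`) and the brute-force search in `induce` are assumptions chosen for illustration. They are not the instruction set or the induction method from our paper, and real ARC or TW concepts need a far richer vocabulary.

```python
from itertools import product

# Hypothetical primitives over grids (lists of rows); illustration only.
def flip_h(g):
    return [row[::-1] for row in g]

def flip_v(g):
    return g[::-1]

def transpose(g):
    return [list(col) for col in zip(*g)]

PRIMITIVES = {"flip_h": flip_h, "flip_v": flip_v, "transpose": transpose}

def run(program, grid):
    """Apply a sequence of primitive names to a grid, left to right."""
    for name in program:
        grid = PRIMITIVES[name](grid)
    return grid

def induce(examples, max_len=3):
    """Return the shortest program consistent with all (input, output) pairs, or None."""
    for length in range(1, max_len + 1):
        for program in product(PRIMITIVES, repeat=length):
            if all(run(program, x) == y for x, y in examples):
                return program
    return None

# Two examples of the same concept: rotate the image 90 degrees clockwise.
examples = [
    ([[1, 2], [3, 4]], [[3, 1], [4, 2]]),
    ([[0, 5], [0, 0]], [[0, 0], [0, 5]]),
]
program = induce(examples)
print(program)
print(run(program, [[7, 0], [0, 0]]))  # [[0, 7], [0, 0]]
```

The induced program is the "concept", and running it on a new grid produces the answer image. Enumeration like this blows up quickly as the primitive set grows, which is one reason the choice of primitives matters so much.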

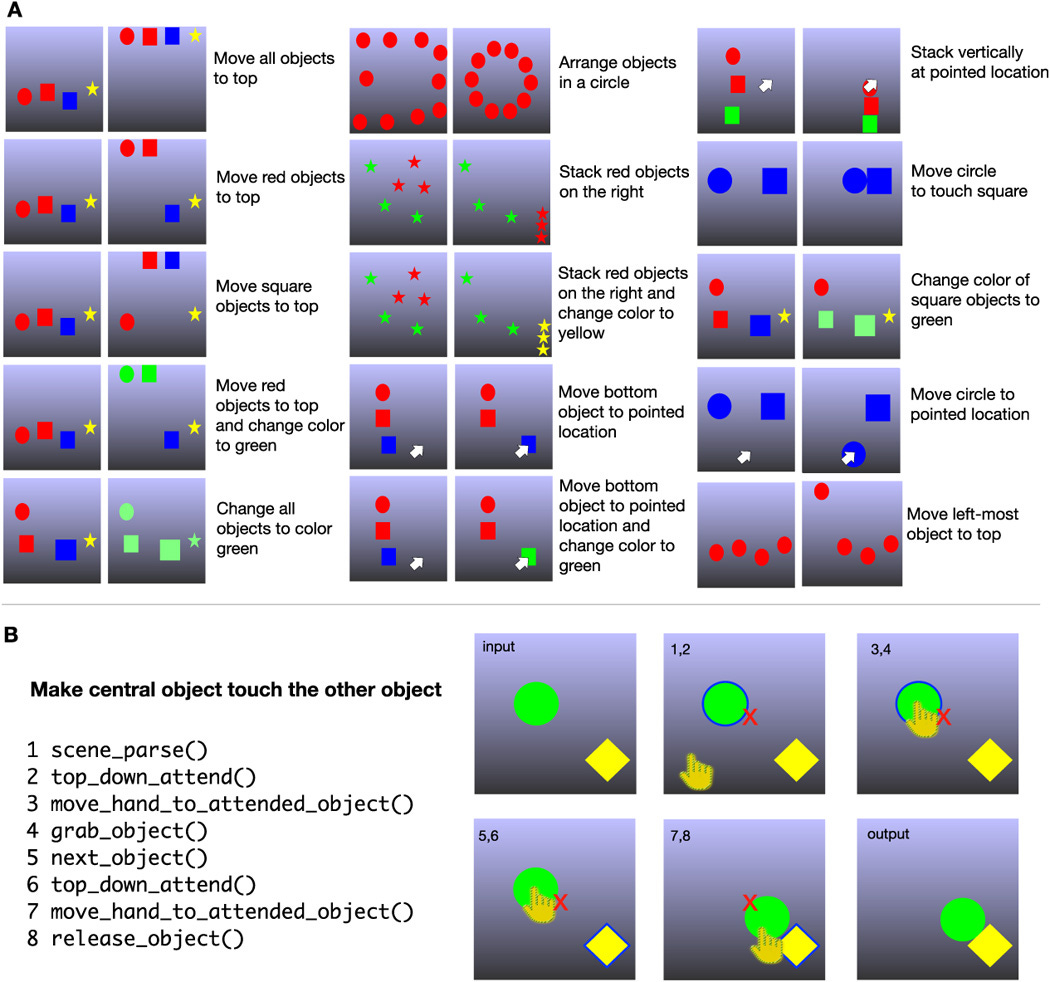

We called this conceptual world “Tabletop world” (TW). More examples of concepts from the tabletop world are shown below, along with an induced program to solve a concept.

Some concepts in ARC are almost identical to the ones in the tabletop world, like the examples below. However, despite the similarity, there is a core difference between tabletop world and ARC: ARC is not systematic.

“Tabletop world” (TW) is systematic, ARC is not.

While the abstract images used in ARC have many similarities to those in our ‘cognitive programs’ work, one crucial difference is that TW is systematic and ARC is not. By systematic, I mean that the concepts are derived from a physically consistent simplified world that is a subset of the real world. Let me explain:

All the ‘abstract’ examples in TW are generated from a systematic domain:

Objects are on a table top. What is seen is the top-down view. Objects are 2D. They obey simple laws of object interactions.

All the conceptual manipulations are achieved by sliding the objects along the tabletop, without lifting. Objects do not occlude each other.

Some manipulations involve imagining objects that are not present, and sliding other objects in reference to this imagined object.
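The rules above are simple enough to sketch in code. The following is a toy Python version of one sliding move, assuming objects are sets of grid cells seen from the top. The function name `slide`, the cell representation, and the stop-on-contact behavior are my simplifications for illustration, not the simulator used in our paper.

```python
def slide(objects, name, direction, width, height):
    """Slide one object cell by cell until a wall or another object blocks it.

    objects: dict mapping object name -> set of (row, col) cells.
    Returns a new dict. Objects never overlap (no occlusion) and the moved
    object passes through every intermediate position (no lifting).
    """
    dr, dc = {"up": (-1, 0), "down": (1, 0),
              "left": (0, -1), "right": (0, 1)}[direction]
    # Cells taken by every other object on the table.
    occupied = set().union(*(cells for n, cells in objects.items() if n != name))
    cells = objects[name]
    while True:
        moved = {(r + dr, c + dc) for r, c in cells}
        in_bounds = all(0 <= r < height and 0 <= c < width for r, c in moved)
        if not in_bounds or moved & occupied:
            break  # blocked by the table edge or by another object
        cells = moved
    return {**objects, name: cells}

# A 2x5 table with a unit square and a vertical bar.
table = {"square": {(0, 0)}, "bar": {(0, 3), (1, 3)}}
after_square = slide(table, "square", "right", width=5, height=2)
after_bar = slide(table, "bar", "right", width=5, height=2)
print(after_square["square"])  # {(0, 2)}: stopped by the bar
print(sorted(after_bar["bar"]))  # [(0, 4), (1, 4)]: stopped by the table edge
```

Because every concept has to be expressible through moves like this one, the space of concepts is infinite but still constrained by the physics of the domain.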

While an infinite number of concepts can be generated in the TW domain (everything in TW should be an ARC challenge), the domain is still closed. One cannot generate arbitrary concepts in this domain. Here is a relevant passage from our paper about this:

In contrast, ARC is not generated from a systematic domain like this that is a proper subset of the real world. The nature of ARC seems to be “whatever Chollet could think about and create in a reasonable amount of time”. The open-world nature of it creates significant challenges for attacking it, especially using the idea of “concepts are programs”. To understand this, let’s examine the core ideas behind how we tackled the TW concepts.

Programs on a “Visual Cognitive Computer” with an embedded world model.

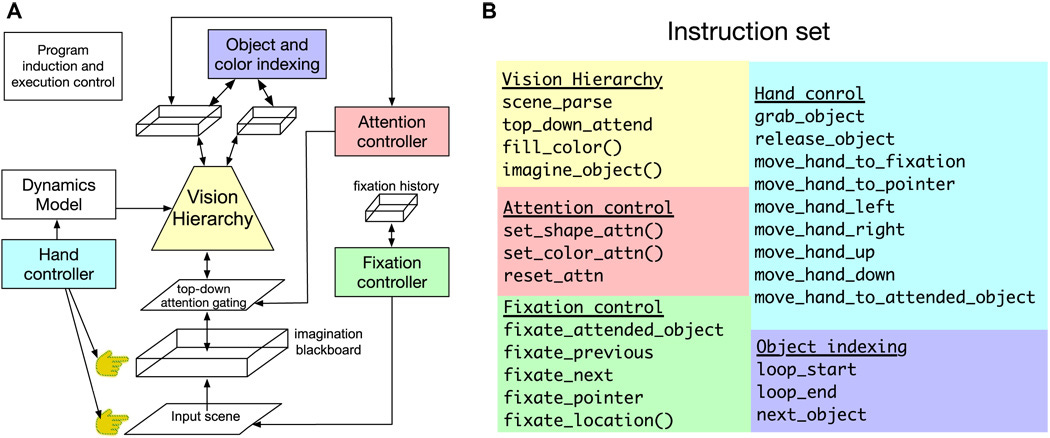

If concepts are programs, then they need to run on a “computer”. This needs two things: the computer architecture and the instruction set.

If you look carefully at the figure below, you’ll see that the computer architecture has a world model embedded in it. This world model consists of a vision hierarchy, a dynamics model, and controllers for hand, fixation, and top-down attention.

The instruction set specifies how this world model is controlled internally for running simulations.

Those are two key ideas:

Having a controllable world model

Being able to run mental simulations using this world model.

The instruction set of this computer is just the primitives for controlling the world model.
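As a rough illustration of "instructions are primitives for controlling a world model", here is a toy sketch in Python. The `WorldModel` fields and the instruction names (`attend`, `fixate_at`, `grab_attended`, `move_hand_to_fixation`, `release`) are hypothetical and heavily simplified. They are not the actual instruction set from our paper, and the vision hierarchy and dynamics model are reduced to a dictionary of object positions.

```python
import copy
from dataclasses import dataclass
from typing import Optional

@dataclass
class WorldModel:
    """Toy stand-in for a controllable world model (all fields illustrative)."""
    objects: dict                    # object name -> (row, col) position
    attended: Optional[str] = None   # object selected by top-down attention
    fixation: tuple = (0, 0)         # where the model is currently "looking"
    held: Optional[str] = None       # object currently controlled by the hand

# Hypothetical instructions: each one is a small control action on the model.
def attend(wm, name):
    wm.attended = name

def fixate_at(wm, position):
    wm.fixation = position

def grab_attended(wm):
    wm.held = wm.attended

def move_hand_to_fixation(wm):
    if wm.held is not None:
        wm.objects[wm.held] = wm.fixation

def release(wm):
    wm.held = None

INSTRUCTIONS = {f.__name__: f for f in
                (attend, fixate_at, grab_attended, move_hand_to_fixation, release)}

def simulate(program, wm):
    """Run a program on a copy of the world model: a 'mental simulation'."""
    imagined = copy.deepcopy(wm)
    for op, *args in program:
        INSTRUCTIONS[op](imagined, *args)
    return imagined

# Concept: "move the circle to the top-left corner".
program = [
    ("attend", "circle"),
    ("grab_attended",),
    ("fixate_at", (0, 0)),
    ("move_hand_to_fixation",),
    ("release",),
]
scene = WorldModel(objects={"circle": (2, 3), "square": (4, 1)})
imagined = simulate(program, scene)
print(imagined.objects["circle"])  # (0, 0)
print(scene.objects["circle"])     # (2, 3): the real scene is untouched
```

The point of the sketch is the separation of roles: the program only sequences control actions, while everything it can express is bounded by what the world model exposes.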

If ARC is to be solved using ‘concepts as programs’, then any solution to ARC will have to have an architecture similar to this that incorporates a controllable world model. The details might vary, but we think we identified some of the core components of this architecture and some of the core operations in the world model.

One key aspect of this world model is that the vision hierarchy (VH) is bidirectional, with tight coupling between the recognition pathway and the imagination pathway, allowing it to simulate the (simplified) world dynamics in a factorized and context-appropriate manner. We elaborated on this further in our paper:

For the tabletop world, it was reasonably easy to specify an overall cognitive architecture like this. For ARC, since it is not very systematic, specifying this architecture and instruction set becomes hard, and somewhat arbitrary.

Which problems are unsolvable because the vision hierarchy used does not have the correct operations in it? Which problems are unsolvable because our program induction and transfer methods are not working well? Which problems require elementary school math? These kinds of questions become harder to debug when the domain is not systematic.

Pure reason you Kan’t

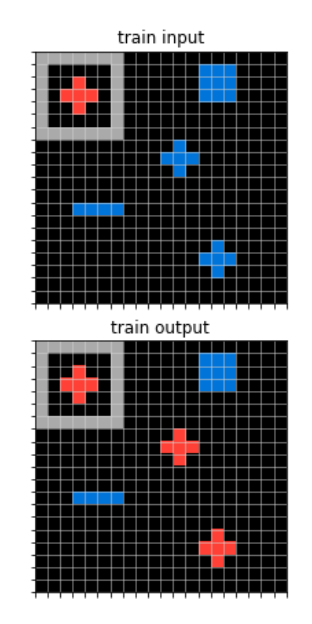

Although ARC is supposed to be a challenge for ‘abstract reasoning’, many of the challenges piggy-back on the generalization provided by the perceptual system. Consider this one:

What is the ‘reason’ for filling in those specific red pixels? It looks reasonable because of the prior provided by our perception system. How this prior is systematically brought into use to induce concepts is the trick, and that would then include work on perception, not just abstract reasoning. I don’t think any of the existing artificial perception systems have all the right priors or provide the right amount of control.

The above example shows that some ideas of “objectness”, “contour continuation” are expected to be present in the system for ARC to generalize. Other examples also use the ideas of “inside/outside”, “closure” etc. Those priors have to come from the perceptual system.
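To see how much is hidden inside a prior like “inside/outside”, here is one possible way to make it explicit on a grid: flood-fill the background from the border and call whatever is left over “inside”. This is a sketch under my own assumptions (4-connectivity, a single background color, the helper name `enclosed_cells`). A human perceptual system does something far more flexible, which is exactly the problem.

```python
from collections import deque

def enclosed_cells(grid, background=0):
    """Return background cells that cannot be reached from the grid border."""
    h, w = len(grid), len(grid[0])
    # Start the flood fill from every background cell on the border.
    queue = deque(
        (r, c) for r in range(h) for c in range(w)
        if (r in (0, h - 1) or c in (0, w - 1)) and grid[r][c] == background
    )
    seen = set(queue)
    while queue:
        r, c = queue.popleft()
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if (0 <= nr < h and 0 <= nc < w and (nr, nc) not in seen
                    and grid[nr][nc] == background):
                seen.add((nr, nc))
                queue.append((nr, nc))
    return {(r, c) for r in range(h) for c in range(w)
            if grid[r][c] == background and (r, c) not in seen}

closed = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 0, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
# Same shape with a one-cell gap in the top edge of the contour.
gapped = [row[:] for row in closed]
gapped[1][2] = 0

print(enclosed_cells(closed))  # {(2, 2)}
print(enclosed_cells(gapped))  # set()
```

A one-pixel gap removes the “inside” entirely for this operation, whereas a person would still see a nearly closed box. Deciding which of those behaviors the system should have is a perceptual design choice, not a reasoning one.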

But then why is occlusion not included? What about transparency? Who determines which perceptual generalizations are OK and which are not? None of these are specified in the ARC challenge, and the decisions seem to be somewhat arbitrary.

Have a look at the example below. This is about changing the color of the blue crosses to the one in the target. Note that humans would still be able to solve the task even if the other crosses were bigger in size, or rendered in a different way, by the outline only, for example. Even if they looked nothing like a cross, but the pixels spelled out the word ‘c.r.o.s.s’, humans who can read English would be able to solve it. Which of these generalizations are within the domain of ARC, and which are not?

Note that developing a perception system with the right priors, the right amount of control, and with appropriate world dynamics modeled in it is still a research challenge. What looks like pure abstract reasoning often depends on inheriting generalizations from such a system.

Like other tests, ARC is necessary, not sufficient.

None of these problems would matter if ARC were a sufficient test, but just like Tabletop world or Raven’s Progressive Matrices, it is just a necessary test. If that is so, why not make a series of systematic necessary-condition tests instead of a test that is not systematic?

Parting thoughts

When all the excitement is around LLMs, it is refreshing to see the focus on abstract reasoning and non-linguistic concept induction. I do believe this is an exciting and important problem, and the ARC prize brings attention to it. However, I think the challenge itself is hobbled by some of the problems I described above: a lack of systematicity, entanglement with perceptual priors without clear acknowledgement or delineation, and so on.

Some of these problems might be why there hasn’t been verifiable systematic progress on this problem despite ARC being out for a while and having grabbed attention before. Maybe it is possible to divide ARC into different systematic domains, which are then tackled. Many papers select a subset of ARC problems to work on, but these often result in dead ends with no path to solving the other problems in the challenge. However, with renewed enthusiasm and bigger prize money, it is also possible that we make progress despite those problems. Hopefully the perspective from our prior work helps:

Further reading

Science Robotics article (open access): Zero-shot task-transfer on robots by inducing concepts as cognitive programs

A thought is a program. An old blog post from Vicarious at the time of publication of the above paper.

Cognitive programs: software for attention’s executive.

"Pure reason you Kan’t"

This is the best part. :P

“Concepts as cognitive programs”, I like this phrase, I think the ARC challenge is really going to be an induction challenge.